Machine learning has proven to be an effective tool to help authorities mitigate flood and bushfire threats and accelerate community recovery – by detecting damaged structures, optimising routing and improving resource allocation.

In this special interview, Esri’s Global Director of Artificial Intelligence, Omar Maher, answers questions from the ArcGIS user community on machine learning and how it can be used for disaster preparation and recovery.

Before you read the blog, check out Omar’s presentation on-demand and live Q&A to get the full background on machine learning for disaster response and recovery.

Q. How long might it take to build a deep learning training data set for locating and counting solar panels on residential rooftops?

A. I would break down this question further to ‘how much training data do we need to train deep learning models specifically for solar panels’? The answer depends on a couple of things. The more obvious the feature or the object you want to detect is, the less training data you will need. And the less diversified the versions of the object, the less training data is required.

Let’s say you’re interested in detecting three or four major types of solar panels – the more high-resolution imagery you have, the less training data you’ll need for the object. I would start with around three thousand training examples and use the image augmentation technique which we have in Python to generate an even wider variety of these.

Image augmentation takes your training data and applies distortions and rotations to it, helping you generate around eight to ten thousand examples instead of three thousand. You can complete the labelling in a couple of days using crowdsourcing, or with a team of five or six working in parallel using the labelling app in ArcGIS Enterprise or ArcGIS Online.
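To make the idea concrete, here is a minimal sketch of rotation and mirroring on a tiny image chip, written in plain Python. It is not the ArcGIS implementation; the function names (`rotate90`, `flip_horizontal`, `augment`) and the 2x2 `chip` are hypothetical stand-ins chosen for illustration.

```python
def rotate90(tile):
    """Rotate a 2D grid of pixel values 90 degrees clockwise."""
    return [list(row) for row in zip(*tile[::-1])]


def flip_horizontal(tile):
    """Mirror a 2D grid left to right."""
    return [row[::-1] for row in tile]


def augment(tile):
    """Return the tile plus its rotated and mirrored variants, skipping duplicates."""
    variants = []
    current = tile
    for _ in range(4):  # 0, 90, 180 and 270 degrees
        for candidate in (current, flip_horizontal(current)):
            if candidate not in variants:
                variants.append(candidate)
        current = rotate90(current)
    return variants


# A tiny 2x2 stand-in for a labelled image chip (hypothetical pixel values).
chip = [[1, 2],
        [3, 4]]

variants = augment(chip)
print(len(variants))  # 8 distinct variants from one chip
```

With real training chips, the labels (for example bounding boxes) have to be rotated and mirrored along with the pixels, and you would typically keep only a subset of the variants to reach the eight to ten thousand examples mentioned above.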

Another example is building footprints in an area with a lot of building variation – you might need ten thousand training examples so that will require more time to complete. It will depend on how obvious the feature is and the resolution of the data. For solar panels, it might take a couple of days to generate good quality data as a starting point.

Q. What is your best practice advice for collating large deep learning training data sets?

A. Spend plenty of time collecting quality training data because if you don’t have good training data, you’re not going to be able to train models that generate good accuracy. Try to have diversified examples – if you want to detect a specific object, such as solar panels, get different examples of each type of solar panel. If you want to detect tree species, you’ll need a broad variety of examples such as data from different cities, geographies, regions, and even different sensors if possible. If you’re going to run models across different imagery, try to take training data from each type of imagery.

My second piece of advice is regarding hardware. I would generally recommend collating large training data sets in the cloud versus on premise because it’s easier to spin up powerful machines. Depending on the need, you can spin up different versions of virtual machines, choose different GPUs and more. If you’re going to do this locally, try to get a powerful machine with a powerful GPU – like the NVIDIA V100 – to do this kind of processing.

My third recommendation is to experiment with different types of neural networks because there’s no one size fits all – it’s about optimising the neural network to produce the best results. For example, if you want to detect objects, try different backbones. We support different neural networks and have ResNet with different depths. There are three versions supported right now, so if you have ArcGIS Pro 2.5 or 2.6 with Image Analyst, you can play with different neural networks, optimise different parameters and see which is better.

Deep learning and machine learning are very iterative processes. You’ll need to keep collecting more training data, trying different networks and repeating until you have good results. Don’t give up!

Q. How do you scale models on an end-to-end AI life cycle?

A. Sometimes people want to run deep learning models across entire cities, states or even a country in a couple of minutes or hours instead of days and weeks. There are two things required for that – the first is GPU power and the second is how you parallelise the inference process.

I highly recommend good, powerful GPUs. Start with at least an NVIDIA RTX 2080, then upgrade to an NVIDIA V100 for a more powerful GPU. Try to parallelise the inference by using distributed raster analytics with multiple image servers or multiple raster analytics servers. As you know, we have distributed raster analytics, and deep learning is one of the capabilities it supports.

As an example, we ran deep learning models to extract building footprints across a major city in the US. Doing this locally took us almost four-and-a-half hours but with four image servers it only took us 20 minutes. So, I would highly recommend a powerful GPU.
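As a quick check of what those timings imply, the arithmetic can be sketched in Python. The function name `speedup` is hypothetical, and "almost four-and-a-half hours" is rounded to 270 minutes for the calculation.

```python
def speedup(baseline_minutes, parallel_minutes):
    """Return how many times faster the parallel run was than the baseline."""
    return baseline_minutes / parallel_minutes


local_minutes = 4.5 * 60      # local run, rounded from "almost four-and-a-half hours"
distributed_minutes = 20      # run with four image servers
servers = 4

ratio = speedup(local_minutes, distributed_minutes)
print(f"{ratio:.1f}x faster overall")            # 13.5x faster overall
print(f"{ratio / servers:.2f}x per server")      # 3.38x per server
```

A ratio above 4x with four servers suggests the gain came from more than parallelism alone, for example faster hardware on each server, although the interview does not say which.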

The second thing I recommend is using distributed raster analytics servers with ArcGIS Enterprise to scale the inference process.
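The general pattern behind scaling inference this way is to cut the imagery into tiles and process them concurrently. The sketch below shows that pattern with Python's standard library only. It does not call ArcGIS; `run_inference` is a hypothetical placeholder for a real model call, and the raster size and tile size are made-up values.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tiles(width, height, tile_size):
    """Return (x_min, y_min, x_max, y_max) pixel windows that cover the raster."""
    tiles = []
    for y in range(0, height, tile_size):
        for x in range(0, width, tile_size):
            tiles.append((x, y, min(x + tile_size, width), min(y + tile_size, height)))
    return tiles


def run_inference(tile):
    """Hypothetical stand-in for a model call: returns the tile's pixel count."""
    x_min, y_min, x_max, y_max = tile
    return (x_max - x_min) * (y_max - y_min)


def process_in_parallel(tiles, workers=4):
    """Process tiles with a pool of workers, keeping results in tile order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_inference, tiles))


tiles = split_into_tiles(width=1000, height=600, tile_size=256)
results = process_in_parallel(tiles, workers=4)
print(len(tiles), sum(results))  # 12 600000
```

In a real deployment the workers would be separate image servers rather than threads on one machine, and the results would be detected features rather than pixel counts.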

Soon, we’re also going to allow training on multiple GPUs on the same machine.

Q. Here in Australia, a lot of clients use the Amazon Web Services or Microsoft Azure clouds – do you leverage clouds like that to distribute the image servers?

A. All the time. Most of the workflows demonstrated in my presentation were deployed in Microsoft Azure or Amazon Web Services. Technically you can do it on premise, but we recommend the cloud because you can use the virtual machines. In Azure, we use the data science virtual machine which has a type called the Deep Learning Virtual Machine that comes with GPUs that you can scale up and down elastically. We are doing the same thing with Amazon – so I recommend it if you want to scale these workflows.

Q. When determining tree species with 3D point cloud, are you able to share the percentage of species that might be successfully identified in this type of analysis?

A. I don’t have the specific percentage but out of five or six species, four of them had a 75% accuracy. I think this answer is very relative because it entirely depends on the species that you want to detect – or the user – and the amount of training data that you have available.

If you go to the Living Atlas, there is a PointCNN model that can detect trees from point cloud and is a good starting point. Try this model, see the kind of results it’s generating and keep fine-tuning it with more data. The Python API gives you the ability to train or re-train pre-trained models with your new data. If you’re not getting good results, keep adding more good data and try to optimise the neural network.

Q. What is the spec of your workstation?

A. The most important part of hardware requirements for deep learning is the GPU – no one size fits all but I can give you some guidelines. GPUs are the most important piece for both training and inference. There are different kinds and the higher the better, but an NVIDIA RTX 2080 Ti would be a starting point. If you need something more, then you could try out the NVIDIA V100 GPU card. For RAM, I recommend at least 16GB or 52GB DDR RAM, and a 500GB-plus SSD for storage.

Q. Is the deep learning model embedded within ArcGIS Pro and ArcGIS Enterprise 10.6?

A. The deep learning model is not really embedded within ArcGIS Pro; however, you can download some of the models from the Living Atlas. There are three deep learning models available that you can download and play with – including the Building Footprint model, which is one of the models that was used in detecting the damaged buildings after bushfires. It’s not embedded within ArcGIS Pro but it can be used in ArcGIS Pro or ArcGIS Enterprise – I recommend using it in ArcGIS Pro 2.6 but you can use ArcGIS Pro 2.5 or 2.4 as well. If you want to use it with ArcGIS Enterprise then I recommend either 10.7 or 10.8.

Q. Is there a training data repository/library that we can access?

A. No, but you can use data from the Living Atlas for similar purposes.

Q. What extensions are required to do point cloud CNN analysis?

A. No extensions are required, just 3D Analyst for pre/post-processing. You don’t need a licence; you can just use the PointCNN model which comes with arcgis.learn (part of the Python API). This can be downloaded for free but will need to be executed against either ArcGIS Online or ArcGIS Enterprise.

Q. How does the point cloud training handle terrestrial LiDAR from both MLS and stationary LiDAR?

A. We are working on having Point Cloud training work against terrestrial LiDAR – this is still in development.

Q. Do deep learning models require custom configuration or are they useable out of the box?

A. The good news is that you don’t need to start from scratch. You can start with some of the deep learning models on the Living Atlas but we expect that you might need to optimise and enhance these models with data from the area that you are running them against. Try it out, if the results are not great, try to optimise and enhance with local data and take it from there.

Q. Can you use deep learning on images from image services?

A. Yes, deep learning can run against image services.

Q. Machine learning tasks typically use large amounts of data – how do you deal with moving such volumes of data into cloud environments (especially in poorly connected countries)?

A. You don’t necessarily need to use the cloud. The cloud is a convenient option but if you have connectivity issues just get a good GPU and work on premise. If you want to deal with massive amounts of data, the cloud is absolutely going to be more convenient. There is no simple answer for moving the data up to the cloud in poorly connected countries. If these problems are consistent, I would highly recommend building a strong on-premise environment with plenty of RAM, CPU cores and GPUs.

Q. What was the accuracy of the damaged buildings assessment that you demonstrated?

A. It was around 80% precision, 80% recall.
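For readers less familiar with these measures, precision and recall can be computed from counts of correct and incorrect detections as sketched below. The counts used here are hypothetical, chosen only so that both measures come out at 80%; they are not the actual figures from the damaged buildings assessment.

```python
def precision(true_positives, false_positives):
    """Share of detections that were correct."""
    detected = true_positives + false_positives
    return true_positives / detected if detected else 0.0


def recall(true_positives, false_negatives):
    """Share of real objects that the model found."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


# Hypothetical counts for illustration only.
tp = 80   # damaged buildings correctly flagged
fp = 20   # buildings flagged as damaged that were not
fn = 20   # damaged buildings the model missed

print(f"precision: {precision(tp, fp):.0%}")  # precision: 80%
print(f"recall: {recall(tp, fn):.0%}")        # recall: 80%
```

Precision tells you how much of what the model flagged can be trusted, while recall tells you how much of the real damage it caught.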

Q. Should you use Linux or Windows for Image Server?

A. You can use both.

Q. Are there any free tutorials available to learn more about this process?

A. There are lots of courses you can engage with, including the course on Deep Learning for ArcGIS and the free course on using Deep Learning to Assess Palm Tree Health.

Q. Can you please link us to some case studies or examples of how deep learning may assist or provide cost efficiencies in automatic vegetation classification?

A. The Coconut Palm Health in Tonga case study (located on the fourth tab on the page) is a good example of deep learning in this space.

Q. Is it possible to incrementally increase training samples (e.g. across different image dates/time of year)?

A. Yes, it’s possible. It’s highly recommended to retrain the model with additional data from different times. If you have a good model and want to make it better, you can look at more data and retrain it.

Q. To work on counting tree numbers in a specific area, does that require high resolution imagery as good training data?

A. I’d recommend trialling the model and seeing how it performs – especially if you have trained it against high resolution data. It should generally be able to detect things in lower resolution but the other way around might be a little challenging. If you trained it against lower resolution data and you want to detect against higher resolution data, that can be more difficult. Just keep in mind that deep learning in general is a very incremental process – so try it out and if it’s not working, add more training data. That is absolutely better than starting from scratch.

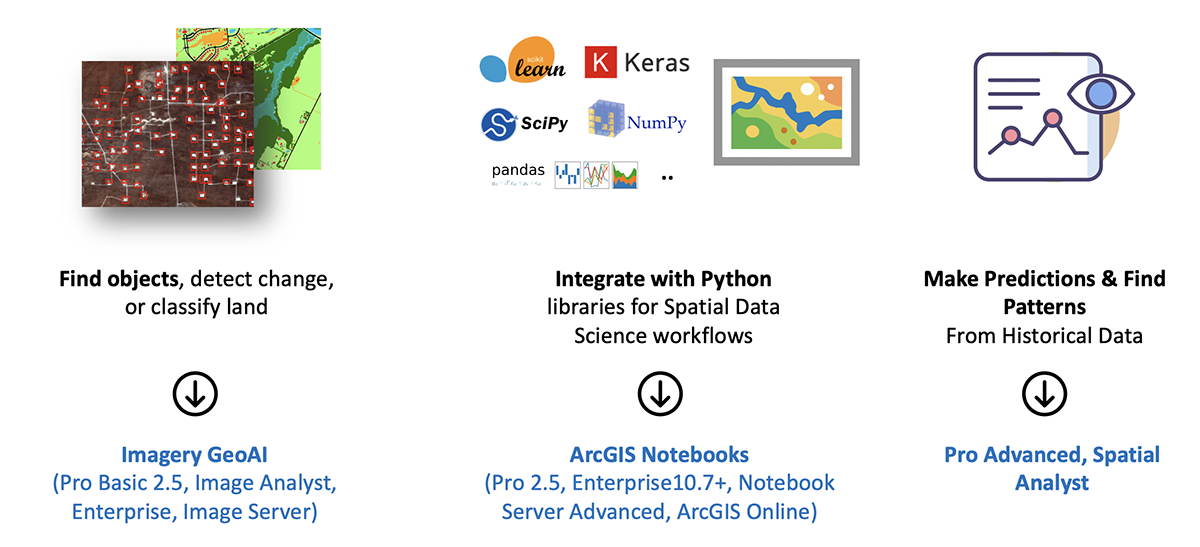

Q. What ArcGIS licences are required to use machine learning?

A. Machine learning is a horizontal capability taking advantage of different products in ArcGIS. For deep learning capabilities with imagery – similar to what I demonstrated in the presentation – you’ll need the ArcGIS Image Analyst extension with ArcGIS Pro 2.5 or 2.6. If you want to do this work locally and scale it, then you will need to use distributed raster analytics on ArcGIS Enterprise Server 10.7 and above with ArcGIS Image Server.

If you want to do the workflow I demonstrated with point cloud instead of imagery then you will need the ArcGIS 3D Analyst extension to handle the 3D data and analytics. You can do deep learning locally or in an ArcGIS Online Notebook – you can use the PointCNN model which comes for free with the arcgis.learn module and is part of the Python API.

Most of the machine learning tools in ArcGIS Pro – like predictions, geographically weighted regression or other tools on clustering – can be accessed on ArcGIS Pro Basic but for some of them you will need the ArcGIS Spatial Analyst extension.

Q. Do you need separate licences for the deep learning model or is this in-built within ArcGIS Pro?

A. You don’t need any licences for training a model; you can do this through the Python API and ArcGIS Notebooks in ArcGIS Pro 2.5. If you use or consume the model, you don’t need a licence for the model itself.

We have some pre-trained models publicly available that can be downloaded from the Living Atlas. I encourage you to go there and have a look – search for deep learning and you’ll find the free models (which we’ll be adding to).

If you want to do anything using the model against overhead imagery, you’re going to need the ArcGIS Spatial Analyst extension. You’ll need Image Server if you’re going to do this in server mode where you are executing against server – like in Jupyter Notebook.

You won’t need a specific licence if you want to do deep learning with vector or point cloud.

This blog is one part of a four-part blog series with keynote presenters from Ozri 2020. Read the Q&As with Norman Disney and Young’s John Benstead, Esri’s Global Data Science Lead Lauren Bennett and Product Engineer Ankita Bakshi, and the New York State Police’s Kellen Crouse.

If you’d like more information on machine learning or artificial intelligence, call 1800 870 750 or submit an enquiry.